What Is a DOE System?

AI is powerful. But unreliable.

Ask it the same question twice. Get different answers.

That's fine for brainstorming. Terrible for business operations.

Then I found out about Nick Saraev's DOE systems.

Directive-Orchestration-Execution.

The Problem With AI Today

AI models are probabilistic.

Every response has variance. Even at temperature 0, outputs aren't guaranteed to be identical.

This creates a compound problem:

- 90% accuracy per step

- 5 steps in a workflow

- 0.9 × 0.9 × 0.9 × 0.9 × 0.9 = 59% success rate

That's why AI "agents" fail on complex tasks.

Each step introduces error. Errors compound. By step 5, you're flipping a coin.
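The arithmetic above generalizes to any chain length. Here is a minimal sketch, assuming every step succeeds independently with the same probability (a simplification). The function name `chain_success_rate` is made up for illustration.

```python
def chain_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumes steps fail independently of each other, which is a
    simplification of how real workflows behave.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_accuracy ** steps


if __name__ == "__main__":
    # Show how quickly reliability decays as the chain gets longer.
    for n in (1, 3, 5, 10):
        print(f"{n} steps at 90%: {chain_success_rate(0.9, n):.0%}")
```

Under the same assumption, a 10-step workflow at 90% per step succeeds only about 35% of the time.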

The Solution: Separate Thinking From Doing

Here's the insight:

AI is good at decisions. Code is good at execution.

So don't make AI do both.

Split them.

| THINKING (AI) | DOING (Code) |
|---|---|
| Understand intent | API calls |
| Route to right tool | Data processing |
| Handle edge cases | File operations |
| Learn from errors | Consistent output |

AI decides what to do. Code does it.
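One way to picture the split, as a rough sketch rather than the actual implementation: the model's only job is to return the name of a tool, and plain code validates that name and runs it. The tool functions and the `TOOLS` registry below are invented for illustration.

```python
from typing import Callable, Dict


def count_words(text: str) -> int:
    """Deterministic 'doing' step: same input, same output."""
    return len(text.split())


def shout(text: str) -> str:
    """Another deterministic step."""
    return text.upper()


# Hypothetical registry of deterministic tools the AI may choose from.
TOOLS: Dict[str, Callable[[str], object]] = {
    "count_words": count_words,
    "shout": shout,
}


def execute(tool_name: str, payload: str) -> object:
    """Run the tool the AI picked.

    The AI's choice is validated by code, so an invalid choice fails
    loudly instead of silently producing bad output.
    """
    if tool_name not in TOOLS:
        raise KeyError(f"Unknown tool: {tool_name!r}")
    return TOOLS[tool_name](payload)
```

Here `execute("count_words", "AI decides, code does")` returns `4`. A tool name that is not in the registry raises a `KeyError` before any work is done.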

Now your success rate is:

- AI routing: 95% accurate

- Code execution: 99.9% reliable

- Combined: 0.95 × 0.999 ≈ 95% success rate

Much better than 59%.

The 3-Layer DOE Architecture

Layer 1: Directives (What To Do)

SOPs written in plain language. Markdown files.

They tell AI:

- What the goal is

- What inputs to expect

- Which tools/scripts to use

- What output to produce

- How to handle edge cases

Think of it like training a new employee. You write down the process. They follow it.

Example directive:

# Audit GTM Container

## Goal

Analyze a GTM container and report issues.

## Steps

1. List all tags using gtm_list_tags

2. Check each tag for:

   - Missing triggers

   - Consent Mode compliance

   - Naming conventions

3. Generate report using audit_report.py

## Edge Cases

- If container has 100+ tags, paginate

- If API rate limited, wait 60 seconds
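Because a directive is just markdown with predictable headings, a script can read it too. The following sketch splits a directive into its `##` sections. `parse_directive` is a hypothetical helper, not part of any library, and it assumes sections are introduced by `## ` headings as in the example above.

```python
from typing import Dict, List, Optional


def parse_directive(markdown: str) -> Dict[str, List[str]]:
    """Split a directive into {section title: non-empty lines}."""
    sections: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for raw in markdown.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            # A new section starts; later lines belong to it.
            current = line[3:].strip()
            sections[current] = []
        elif current is not None and line:
            sections[current].append(line)
    return sections


# Shortened copy of the example directive, used only for demonstration.
SAMPLE = """# Audit GTM Container

## Goal
Analyze a GTM container and report issues.

## Edge Cases
- If container has 100+ tags, paginate
- If API rate limited, wait 60 seconds
"""
```

With this sample, `parse_directive(SAMPLE)["Goal"]` is `["Analyze a GTM container and report issues."]`. A check like this could confirm that every directive has the sections you expect before the AI ever reads it.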

Layer 2: Orchestration (Decision Making)

This is the AI layer. Claude Code in my case.

It reads directives. Makes decisions. Routes tasks.

What it does:

- Understands user intent

- Picks the right directive

- Calls scripts in the right order

- Handles errors gracefully

- Updates directives with learnings

What it doesn't do:

- API calls directly (uses scripts)

- Data processing (uses scripts)

- File manipulation (uses scripts)

AI is the manager. Not the worker.
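To make "calls scripts in the right order" and "handles errors gracefully" concrete, here is a hedged sketch of the plumbing an orchestrator might sit on top of. The AI would produce the ordered plan. `run_plan`, `StepResult`, and `DEMO_PLAN` are hypothetical names, and the demo commands are stand-ins, not scripts from a real project.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class StepResult:
    command: Sequence[str]
    ok: bool
    output: str  # stdout on success, stderr on failure


def run_plan(plan: List[Sequence[str]]) -> List[StepResult]:
    """Run commands in order and stop at the first failure.

    Returning results (instead of raising) lets the orchestrator
    decide what to do next: retry, pick another script, or report.
    """
    results: List[StepResult] = []
    for command in plan:
        proc = subprocess.run(list(command), capture_output=True, text=True)
        ok = proc.returncode == 0
        results.append(StepResult(command, ok, proc.stdout if ok else proc.stderr))
        if not ok:
            break
    return results


# Stand-in plan: the second step fails, so the third never runs.
DEMO_PLAN = [
    [sys.executable, "-c", "print('fetched')"],
    [sys.executable, "-c", "raise SystemExit(1)"],
    [sys.executable, "-c", "print('never runs')"],
]
```

Running `run_plan(DEMO_PLAN)` yields two results: the first succeeded, the second failed, and the third command was skipped.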

Layer 3: Execution (Doing The Work)

Deterministic Python scripts.

They do one thing. They do it reliably. Every time.

Characteristics:

- Single responsibility

- Clear inputs and outputs

- Error handling built in

- Testable

- Fast
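As one illustration of "error handling built in", a script can own the wait-and-retry behavior so the AI never has to improvise it. This is a sketch: `RateLimitError` and `call_with_retry` are hypothetical names, and the 60-second default echoes the edge case in the directive example rather than any real API's documented limit.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error a fetch function raises when rate limited."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 3,
    wait_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, waiting a fixed interval after each rate-limit error.

    `sleep` is injectable so tests can run without actually waiting.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            sleep(wait_seconds)
    raise ValueError("max_attempts must be at least 1")
```

Only the rate-limit error triggers a retry. Any other exception propagates immediately, so unexpected failures stay visible.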

Example:

# execution/monitor/data_flow_validator.py

def validate_client(client_slug):
    """
    Validate tracking for a single client.

    Returns structured result. Always.
    """
    # load_config, run_playwright_checks, and format_results are
    # project helpers not shown here.
    config = load_config(client_slug)
    results = run_playwright_checks(config)
    return format_results(results)

No AI in here. Just code that works.
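"Returns structured result. Always." is worth spelling out. Below is a sketch of one way to guarantee it, assuming a hypothetical `run_checks` callable stands in for the real checks. Failures are caught and reported in the same shape as successes, so the orchestrator never has to parse a traceback. `ValidationResult` and `validate_safely` are illustrative names, not taken from the original code.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ValidationResult:
    client_slug: str
    status: str  # "pass", "fail", or "error"
    issues: List[str] = field(default_factory=list)


def validate_safely(
    client_slug: str, run_checks: Callable[[str], List[str]]
) -> ValidationResult:
    """Wrap the checks so every outcome has the same structure."""
    try:
        issues = run_checks(client_slug)
    except Exception as exc:  # report the failure instead of crashing
        return ValidationResult(
            client_slug, "error", [f"{type(exc).__name__}: {exc}"]
        )
    status = "fail" if issues else "pass"
    return ValidationResult(client_slug, status, issues)
```

The three statuses separate "tracking is broken" (`fail`) from "the check itself broke" (`error`), which is useful when deciding whether to alert a client or fix a script.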

Why This Architecture Wins

1. Reliability

AI errors don't cascade. If the AI picks the wrong script, that script still runs correctly. The mistake is in the routing, not the output. Easy to debug.

2. Speed

Scripts are fast. No LLM inference for execution. AI is only involved in routing decisions.

3. Learning

When something breaks:

- Fix the script

- Update the directive

- System is now stronger

Each error makes the system better. Compound improvement.

4. Transparency

Directives are readable. Anyone can see what the system does. No black box.

5. Portability

Switch AI models anytime. Directives work with Claude, GPT, Gemini. The logic lives in markdown, not prompts.

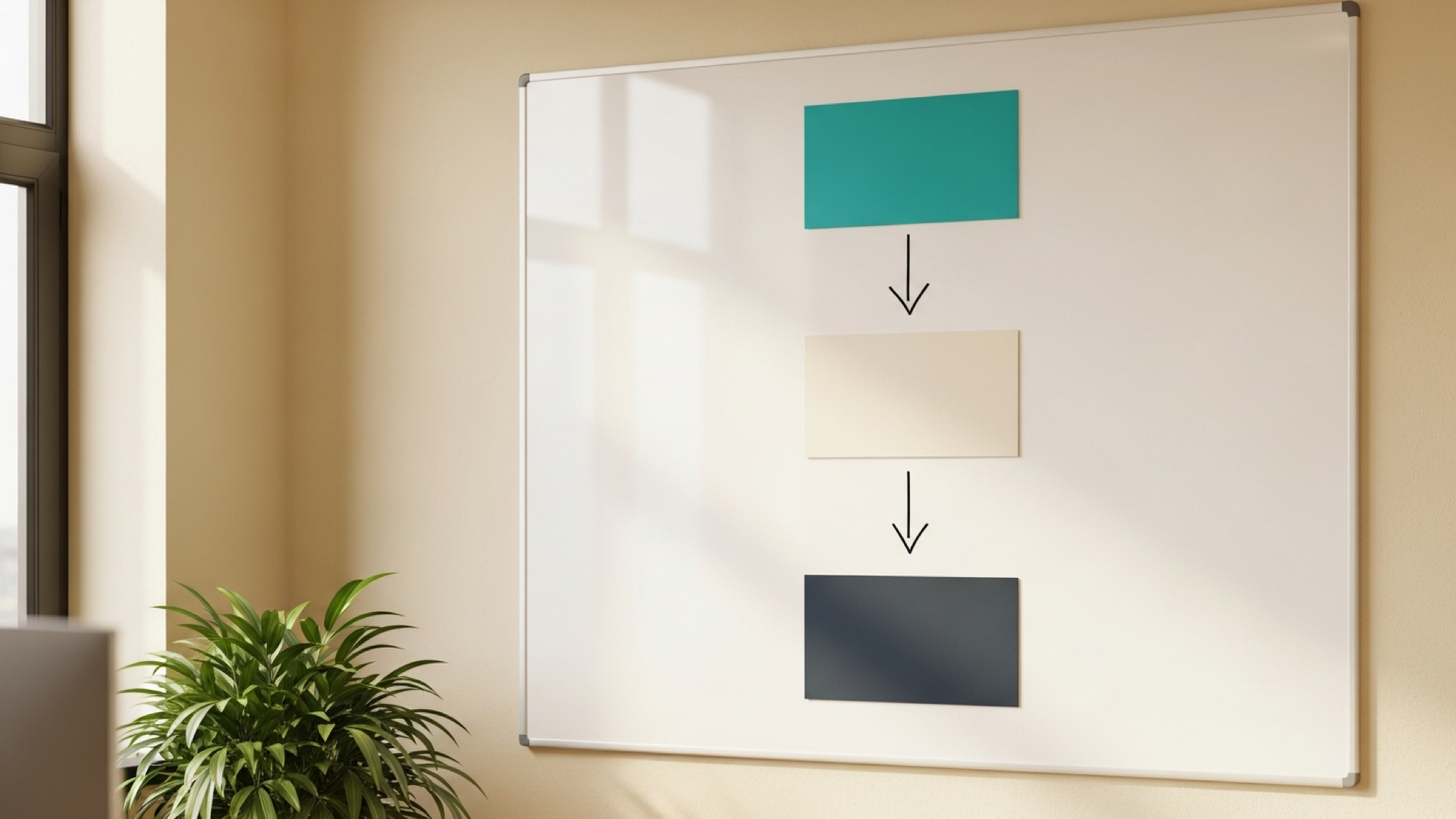

The Self-Annealing Loop

This is where it gets interesting.

Every error is a learning opportunity.

Error occurs

↓

Fix the script

↓

Update the directive

↓

Log the learning

↓

System is stronger

↓

That error doesn't happen again

After 6 months, the system has solved problems you didn't know existed.

It's like compound interest for reliability.
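The "update the directive" and "log the learning" steps can be as simple as appending a dated note to the directive file. This is a sketch under assumptions: the `## Learnings` section name and the `log_learning` helper are invented here, not something the original system is known to use.

```python
from datetime import date
from pathlib import Path
from typing import Optional

LEARNINGS_HEADING = "## Learnings"  # assumed section name


def log_learning(directive_path: Path, note: str, on: Optional[date] = None) -> None:
    """Append a dated learning to a directive file.

    Simplification: notes are appended at the end of the file, so this
    assumes the learnings section is the last section in the directive.
    """
    on = on or date.today()
    text = directive_path.read_text(encoding="utf-8") if directive_path.exists() else ""
    if LEARNINGS_HEADING not in text:
        # Create the section on first use.
        prefix = text.rstrip("\n") + "\n\n" if text.strip() else ""
        text = prefix + LEARNINGS_HEADING + "\n"
    text = text.rstrip("\n") + f"\n- {on.isoformat()}: {note}\n"
    directive_path.write_text(text, encoding="utf-8")
```

Because the note lives in the same file the AI reads before each run, the next run sees the fix without anyone editing a prompt.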

Real-World Example: Tracking DOE

I built Tracking DOE using this architecture.

What it does:

- Monitors marketing tracking (GA4, Google Ads, Meta, etc.)

- Audits GTM containers

- Sends alerts when tracking breaks

- Learns from every issue

The layers:

| Layer | Implementation |
|---|---|
| Directives | directives/gtm-audit.md, directives/troubleshooting.md |
| Orchestration | Claude Code with Stape GTM MCP |
| Execution | Python scripts in execution/monitor/ |

When I ask "audit my GTM container":

- Claude reads the gtm-audit.md directive

- Claude decides which scripts to call

- Scripts fetch data via GTM API

- Scripts analyze and format results

- Claude presents findings

AI thinks. Code works. Results are consistent.

How To Build Your Own DOE System

Step 1: Identify Repetitive Tasks

What do you do repeatedly that could be automated?

- Data processing

- Report generation

- Monitoring

- Auditing

- Onboarding

Step 2: Write Directives

For each task, document:

- Goal

- Steps

- Tools needed

- Edge cases

- Expected output

Keep them in markdown. Simple and readable.

Step 3: Build Execution Scripts

One script per atomic action.

- fetch_data.py

- process_data.py

- generate_report.py

Make them deterministic. Test them.
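"Make them deterministic. Test them." might look like this in practice. The `process_data` function below is a toy stand-in for a real script, and the row format is assumed. The point is that the same input always yields the same output, which a plain assertion can check.

```python
from typing import Dict, List


def process_data(rows: List[Dict[str, str]]) -> List[str]:
    """Toy processing step: normalize and de-duplicate event names.

    Sorting the result makes the output independent of input order,
    which keeps the script deterministic.
    """
    names = {row["event"].strip().lower() for row in rows if row.get("event")}
    return sorted(names)


def test_process_data_is_deterministic() -> None:
    rows = [{"event": " Purchase "}, {"event": "page_view"}, {"event": "purchase"}]
    first = process_data(rows)
    second = process_data(list(reversed(rows)))
    # Same rows in a different order must give the same answer.
    assert first == second == ["page_view", "purchase"]
```

A test like this runs under pytest or as a direct function call. Either way it needs no AI and no network.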

Step 4: Connect With AI

Use Claude Code, Cursor, or any AI coding assistant.

Point it at your directives. Let it orchestrate.

Step 5: Iterate

Every error → update directive → stronger system.

DOE vs Traditional Automation

| Aspect | Traditional | DOE System |
|---|---|---|
| Flexibility | Rigid flows | AI adapts to context |
| Reliability | Script-dependent | Code execution reliable |
| Learning | Manual updates | Self-improving |
| Edge cases | Break the flow | AI handles gracefully |
| Maintenance | High | Low (self-documents) |

DOE gives you the best of both worlds.

AI flexibility. Code reliability.

When NOT To Use DOE

DOE is overkill for:

- One-off tasks

- Simple automations (use Zapier)

- Tasks with no edge cases

- Low-stakes operations

Use DOE when:

- Task has many variations

- Errors are costly

- You want compound improvement

- Multiple people need to use it

Getting Started

Want to see DOE in action?

Check out Tracking DOE - my implementation for marketing tracking.

It's open source. Fork it. Adapt it to your use case.

The architecture transfers to any domain:

- Sales operations

- Customer support

- Content creation

- Data pipelines

- DevOps

Same pattern. Different directives and scripts.

Summary

DOE = Directive-Orchestration-Execution

- Directives: What to do (markdown SOPs)

- Orchestration: AI decides and routes

- Execution: Code does the work

AI thinks. Code works. System learns.

That's how you make AI reliable.